Written in April last year, my procedure to move the PeopleSoft appliance to VMware ESXi (see here) seems to be broken since image #6. My test was on image #3.

One reader found their own way and kindly shared the experience; you can find the workaround here.

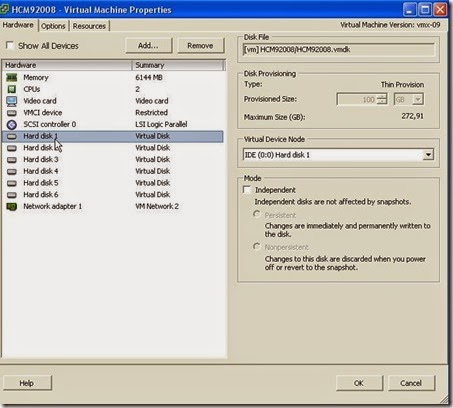

Right now we are on image #8, the first one ever on PeopleTools 8.54. Time to give it a shot.

1. I decided first to follow my own procedure and see.

1.1 OVF changes

First, the ovf file changes. Very few are needed compared to last year:

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# diff HCMDB-SES-854-01.ovf HCMDB-SES-854-01.ovf.tmp

34c34

< <OperatingSystemSection ovf:id="109">

---

> <OperatingSystemSection ovf:id="101">

36,37c36,37

< <Description>Oracle_64</Description>

< <vbox:OSType ovf:required="false">Oracle_64</vbox:OSType>

---

> <Description>oracleLinux64Guest</Description>

> <vbox:OSType ovf:required="false">oracleLinux64Guest</vbox:OSType>

45c45

< <vssd:VirtualSystemType>virtualbox-2.2</vssd:VirtualSystemType>

---

> <vssd:VirtualSystemType>vmx-07</vssd:VirtualSystemType>

Nothing else.
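The three changes in the diff above can also be scripted. This is only a minimal sketch with sed, assuming the descriptor contains exactly the strings shown in the diff; `patch_ovf_for_esxi` is a hypothetical helper name of mine, not something shipped with ovftool.

```shell
# Sketch: reproduce the three descriptor edits from the diff above with sed.
# patch_ovf_for_esxi is a hypothetical helper name, not part of ovftool.
patch_ovf_for_esxi() {
    src=$1   # original descriptor, left untouched
    dst=$2   # patched copy to feed to ovftool
    sed -e 's|<OperatingSystemSection ovf:id="109">|<OperatingSystemSection ovf:id="101">|' \
        -e 's|<Description>Oracle_64</Description>|<Description>oracleLinux64Guest</Description>|' \
        -e 's|\(<vbox:OSType ovf:required="false">\)Oracle_64|\1oracleLinux64Guest|' \
        -e 's|<vssd:VirtualSystemType>virtualbox-2\.2<|<vssd:VirtualSystemType>vmx-07<|' \
        "$src" > "$dst"
}
```

Called as `patch_ovf_for_esxi HCMDB-SES-854-01.ovf HCMDB-SES-854-01.ovf.tmp`, it should produce the same .tmp file that the diff compares against. The patterns are deliberately narrow so that any other occurrence of Oracle_64 in the descriptor is left alone.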

1.2 ovftool control file

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# echo "lax" > .ovftool

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# echo "datastore=vm" >> .ovftool

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# echo "skipManifestCheck" >> .ovftool

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# echo "overwrite" >> .ovftool

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# echo "powerOffTarget" >> .ovftool

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# echo "net:HostOnly=VM Network 2" >> .ovftool

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# echo "annotation=Peopletools 8.54">> .ovftool

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# echo "name=HCM92008" >> .ovftool
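The same control file can be written in one go with a here-document instead of eight echo commands. A small sketch, where `write_ovftool_config` is a hypothetical helper name and the option values are simply the ones used above:

```shell
# Sketch: write the .ovftool options in one shot with a here-document.
# write_ovftool_config is a hypothetical helper; values are copied from the
# echo commands above and should be adapted to your datastore and network.
write_ovftool_config() {
    dir=${1:-.}   # directory that holds the .ovf file
    cat > "$dir/.ovftool" <<'EOF'
lax
datastore=vm
skipManifestCheck
overwrite
powerOffTarget
net:HostOnly=VM Network 2
annotation=Peopletools 8.54
name=HCM92008
EOF
}
```

Running `write_ovftool_config .` from the directory of the ovf file overwrites any existing .ovftool there, so there is no risk of appending the same option twice.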

1.3 Push to ESXi

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# ovftool --version

VMware ovftool 3.5.0 (build-1274719)

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# ovftool HCMDB-SES-854-01.ovf vi://root:pwd@192.168.1.10:443

Opening OVF source: HCMDB-SES-854-01.ovf

Opening VI target: vi://root@192.168.1.10:443/

Deploying to VI: vi://root@192.168.1.10:443/

Transfer Completed

Warning:

- Wrong file size specified in OVF descriptor for 'HCMDB-SES-854-01-disk1.vmdk' (specified: -1, actual 2258674176).

- Wrong file size specified in OVF descriptor for 'HCMDB-SES-854-01-disk2.vmdk' (specified: -1, actual 3552615424).

- Wrong file size specified in OVF descriptor for 'HCMDB-SES-854-01-disk3.vmdk' (specified: -1, actual 8894975488).

- Wrong file size specified in OVF descriptor for 'HCMDB-SES-854-01-disk4.vmdk' (specified: -1, actual 7227696640).

- Wrong file size specified in OVF descriptor for 'HCMDB-SES-854-01-disk5.vmdk' (specified: -1, actual 144896).

- Wrong file size specified in OVF descriptor for 'HCMDB-SES-854-01-disk6.vmdk' (specified: -1, actual 15863050240).

Completed successfully

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]#

Those warnings, which did not appear last year with the previous images, are really scary. If, like me, you want to get rid of them, modify the ovf file.

First check the size of the vmdk files on disk:

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# ls -l *vmdk

-rw------- 1 root root 2258674176 Aug 12 08:30 HCMDB-SES-854-01-disk1.vmdk

-rw------- 1 root root 3552615424 Aug 12 08:35 HCMDB-SES-854-01-disk2.vmdk

-rw------- 1 root root 8894975488 Aug 12 08:53 HCMDB-SES-854-01-disk3.vmdk

-rw------- 1 root root 7227696640 Aug 12 09:04 HCMDB-SES-854-01-disk4.vmdk

-rw------- 1 root root 144896 Aug 12 09:04 HCMDB-SES-854-01-disk5.vmdk

-rw------- 1 root root 15863050240 Aug 12 09:24 HCMDB-SES-854-01-disk6.vmdk

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]#

Take all the sizes and add them to the ovf file (ovf:size=…):

<File ovf:href="HCMDB-SES-854-01-disk1.vmdk" ovf:id="file1" ovf:size="2258674176"/>

<File ovf:href="HCMDB-SES-854-01-disk2.vmdk" ovf:id="file2" ovf:size="3552615424"/>

<File ovf:href="HCMDB-SES-854-01-disk3.vmdk" ovf:id="file3" ovf:size="8894975488"/>

<File ovf:href="HCMDB-SES-854-01-disk4.vmdk" ovf:id="file4" ovf:size="7227696640"/>

<File ovf:href="HCMDB-SES-854-01-disk5.vmdk" ovf:id="file5" ovf:size="144896"/>

<File ovf:href="HCMDB-SES-854-01-disk6.vmdk" ovf:id="file6" ovf:size="15863050240"/>

That’s it for the warnings.
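Rather than copying the sizes by hand, the File elements can be generated from the files themselves. This is a sketch only: `ovf_file_entries` is a hypothetical helper, and it assumes the ids are simply file1, file2, … in the order the disks are passed, as in the descriptor above.

```shell
# Sketch: print one <File> element per vmdk, with ovf:size taken from disk.
# ovf_file_entries is a hypothetical helper; ids are numbered in argument order.
ovf_file_entries() {
    n=1
    for f in "$@"; do
        # wc -c gives the size in bytes; tr strips the padding some wc builds add
        size=$(wc -c < "$f" | tr -d ' ')
        printf '<File ovf:href="%s" ovf:id="file%d" ovf:size="%s"/>\n' \
            "$(basename "$f")" "$n" "$size"
        n=$((n + 1))
    done
}
```

Something like `ovf_file_entries HCMDB-SES-854-01-disk?.vmdk` prints the six lines to paste into the References section of the ovf file.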

1.4 Start the VM

Unfortunately, although ovftool reported success, the new VM cannot boot. It systematically tries to boot from the network card, ignoring all the hard drives. And of course, it fails.

2. The systems’ disks are broken.

In the other blog post I linked above, someone found a way by setting the disk capacity to a dummy size, say 98 GB (see the comment there); the diff below uses 107374182400 bytes, i.e. 100 GiB, for every disk. With the ovftool option “diskMode=thin”, space should not be a problem. And it works.

2.1 The new ovf

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# diff HCMDB-SES-854-01.ovf HCMDB-SES-854-01.ovf.orig

4,9c4,9

< <File ovf:href="HCMDB-SES-854-01-disk1.vmdk" ovf:id="file1" ovf:size="2258674176"/>

< <File ovf:href="HCMDB-SES-854-01-disk2.vmdk" ovf:id="file2" ovf:size="3552615424"/>

< <File ovf:href="HCMDB-SES-854-01-disk3.vmdk" ovf:id="file3" ovf:size="8894975488"/>

< <File ovf:href="HCMDB-SES-854-01-disk4.vmdk" ovf:id="file4" ovf:size="7227696640"/>

< <File ovf:href="HCMDB-SES-854-01-disk5.vmdk" ovf:id="file5" ovf:size="144896"/>

< <File ovf:href="HCMDB-SES-854-01-disk6.vmdk" ovf:id="file6" ovf:size="15863050240"/>

---

> <File ovf:href="HCMDB-SES-854-01-disk1.vmdk" ovf:id="file1"/>

> <File ovf:href="HCMDB-SES-854-01-disk2.vmdk" ovf:id="file2"/>

> <File ovf:href="HCMDB-SES-854-01-disk3.vmdk" ovf:id="file3"/>

> <File ovf:href="HCMDB-SES-854-01-disk4.vmdk" ovf:id="file4"/>

> <File ovf:href="HCMDB-SES-854-01-disk5.vmdk" ovf:id="file5"/>

> <File ovf:href="HCMDB-SES-854-01-disk6.vmdk" ovf:id="file6"/>

13,18c13,18

< <Disk ovf:capacity="107374182400" ovf:diskId="vmdisk1" ovf:fileRef="file1" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="34649586-c43a-4df3-af9c-2445fd543fdf"/>

< <Disk ovf:capacity="107374182400" ovf:diskId="vmdisk2" ovf:fileRef="file2" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="f108476c-e701-49f8-b8f0-f6a2b4d6c4d5"/>

< <Disk ovf:capacity="107374182400" ovf:diskId="vmdisk3" ovf:fileRef="file3" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="6ca0b490-9258-4643-888c-1b0722199fe5"/>

< <Disk ovf:capacity="107374182400" ovf:diskId="vmdisk4" ovf:fileRef="file4" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="1a394121-3721-4d20-8c09-cd4f9b7f2053"/>

< <Disk ovf:capacity="107374182400" ovf:diskId="vmdisk5" ovf:fileRef="file5" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="3bbbc232-080d-4536-916c-262fe5b2d379"/>

< <Disk ovf:capacity="107374182400" ovf:diskId="vmdisk6" ovf:fileRef="file6" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="696b9eac-bbe8-4e49-84c2-c0c5d8b84480"/>

---

> <Disk ovf:capacity="10092418560" ovf:diskId="vmdisk1" ovf:fileRef="file1" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="34649586-c43a-4df3-af9c-2445fd543fdf"/>

> <Disk ovf:capacity="5782371840" ovf:diskId="vmdisk2" ovf:fileRef="file2" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="f108476c-e701-49f8-b8f0-f6a2b4d6c4d5"/>

> <Disk ovf:capacity="41957153280" ovf:diskId="vmdisk3" ovf:fileRef="file3" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="6ca0b490-9258-4643-888c-1b0722199fe5"/>

> <Disk ovf:capacity="15743185920" ovf:diskId="vmdisk4" ovf:fileRef="file4" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="1a394121-3721-4d20-8c09-cd4f9b7f2053"/>

> <Disk ovf:capacity="24675840" ovf:diskId="vmdisk5" ovf:fileRef="file5" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="3bbbc232-080d-4536-916c-262fe5b2d379"/>

> <Disk ovf:capacity="27793221120" ovf:diskId="vmdisk6" ovf:fileRef="file6" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="696b9eac-bbe8-4e49-84c2-c0c5d8b84480"/>

34c34

< <OperatingSystemSection ovf:id="101">

---

> <OperatingSystemSection ovf:id="109">

36,37c36,37

< <Description>oracleLinux64Guest</Description>

< <vbox:OSType ovf:required="false">oracleLinux64Guest</vbox:OSType>

---

> <Description>Oracle_64</Description>

> <vbox:OSType ovf:required="false">Oracle_64</vbox:OSType>

45c45

< <vssd:VirtualSystemType>vmx-09</vssd:VirtualSystemType>

---

> <vssd:VirtualSystemType>virtualbox-2.2</vssd:VirtualSystemType>

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]#

2.2 The ovftool file content (thin option is used)

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# cat .ovftool

lax

datastore=vm

skipManifestCheck

overwrite

powerOffTarget

net:HostOnly=VM Network 2

diskMode=thin

annotation=HCM9.2 - Peopletools 8.54.01

name=HCM92008

2.3 Push to ESXi

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# ovftool HCMDB-SES-854-01.ovf vi://root:pwd@192.168.1.10:443

Opening OVF source: HCMDB-SES-854-01.ovf

Opening VI target: vi://root@192.168.1.10:443/

Deleting VM: HCM92008

Deploying to VI: vi://root@192.168.1.10:443/

Transfer Completed

Completed successfully

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]#

2.4 Boot of the new VM

Then you are back in business and prompted to set up your brand new VM.

3. Capacity from vmdk file

Setting a larger value than needed works, but it is a bit hazardous.

Looking in the vmdk file, we can also see the real disk capacity of the target. For instance, here for disk1:

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# cat HCMDB-SES-854-01-disk1.vmdk|more

KDMV

version=1

CID=aa3d0432

parentCID=ffffffff

createType="streamOptimized"

# Extent description

RDONLY 19711755 SPARSE "HCMDB-SES-854-01-disk1.vmdk"

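I assumed the size is in KB, so it should be 19711755*1024=20184837120. (As the comment at the end of this post points out, the extent size is actually counted in 512-byte blocks, which gives 19711755*512=10092418560.)

"Surprisingly", the KB-based figure is exactly double the capacity specified in the original ovf file for disk1. And the same is true for all the other disks.

Using that value as the capacity also fails. Same as above, the VM tries to boot from the network.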
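To read the extent size without paging through a multi-gigabyte binary file, something along these lines should do. It is a sketch under assumptions: `vmdk_extent_size` and `vmdk_extent_bytes` are hypothetical helper names, GNU grep is assumed (`-a` to treat the vmdk as text, `-m1` to stop at the first match), and the unit is left as a parameter since the post takes it as 1024 while the comment at the end argues for 512.

```shell
# Sketch: extract the extent size from the text descriptor embedded in a vmdk.
# Both helpers are hypothetical; GNU grep is assumed for -a and -m1.
vmdk_extent_size() {
    # The extent line looks like: RDONLY 19711755 SPARSE "name.vmdk"
    LC_ALL=C grep -a -m1 '^RDONLY ' "$1" | awk '{print $2}'
}

# Convert to bytes for a given unit size (1024 or 512, depending on the assumption).
vmdk_extent_bytes() {
    n=$(vmdk_extent_size "$1")
    echo $(( n * $2 ))
}
```

For disk1, `vmdk_extent_bytes HCMDB-SES-854-01-disk1.vmdk 1024` corresponds to the 20184837120 figure, and the same call with 512 gives the capacity already present in the original ovf file.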

4. Size from vmdk disk

VMware comes with a small tool, vmware-mount (version 5.1), which is very useful in our case.

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# for i in `ls |grep vmdk`

> do

> echo $i

> vmware-mount -p $i

> done

HCMDB-SES-854-01-disk1.vmdk

Nr Start Size Type Id Sytem

-- ---------- ---------- ---- -- ------------------------

1 63 530082 BIOS 83 Linux

2 530145 10779615 BIOS 83 Linux

3 11309760 8401995 BIOS 82 Linux swap

HCMDB-SES-854-01-disk2.vmdk

Nr Start Size Type Id Sytem

-- ---------- ---------- ---- -- ------------------------

1 63 11293632 BIOS 83 Linux

HCMDB-SES-854-01-disk3.vmdk

Nr Start Size Type Id Sytem

-- ---------- ---------- ---- -- ------------------------

1 63 81947502 BIOS 83 Linux

HCMDB-SES-854-01-disk4.vmdk

Nr Start Size Type Id Sytem

-- ---------- ---------- ---- -- ------------------------

1 63 30748347 BIOS 83 Linux

HCMDB-SES-854-01-disk5.vmdk

Nr Start Size Type Id Sytem

-- ---------- ---------- ---- -- ------------------------

1 63 48132 BIOS 83 Linux

HCMDB-SES-854-01-disk6.vmdk

Nr Start Size Type Id Sytem

-- ---------- ---------- ---- -- ------------------------

1 63 54283572 BIOS 83 Linux

I take these sizes to be in KB as well (the comment at the end of this post argues they are 512-byte blocks).

Let’s take the first disk: (530082+10779615+8401995)*1024=20184772608

OK, we are not that far from the figure computed from the vmdk header above (double the original capacity), are we? See:

20184837120 (size from the vmdk header) - 20184772608 = 64512. And 64512 is nothing but 63 (start) * 1024.
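The per-disk sum can be left to awk. A small sketch, where `sum_partition_sizes` is a hypothetical helper that reads the `vmware-mount -p` listing on stdin and only assumes the column layout shown above:

```shell
# Sketch: add up the Size column of a `vmware-mount -p` listing read from stdin.
# sum_partition_sizes is a hypothetical helper; only rows whose first field is
# a partition number are counted, so header and separator lines are skipped.
sum_partition_sizes() {
    awk '$1 ~ /^[0-9]+$/ { total += $3 } END { printf "%.0f\n", total }'
}
```

Used as `vmware-mount -p HCMDB-SES-854-01-disk1.vmdk | sum_partition_sizes`, it returns 19711692 for the disk1 listing above, which is 63 less than the 19711755 found in the vmdk header.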

Verifying on the second drive:

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# cat HCMDB-SES-854-01-disk2.vmdk|more

KDMV

version=1

CID=8bdea51b

parentCID=ffffffff

createType="streamOptimized"

# Extent description

RDONLY 11293695 SPARSE "HCMDB-SES-854-01-disk2.vmdk"

11293695 * 1024 = 11564743680 (in other words, the original capacity doubled: 5782371840*2).

Then, from the last output of vmware-mount, we have 11293632*1024=11564679168.

11564743680 - 11564679168 = 64512. Again.

So I think it is safe to say that the required capacity is double the initial capacity minus 64512.
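Applied to all six disks, the rule is a one-liner in shell arithmetic. A sketch, with `esxi_capacity` as a hypothetical helper name and the input values being the original capacities from the ovf file:

```shell
# Sketch of the rule derived above: double the original capacity, minus 63*1024.
# esxi_capacity is a hypothetical helper name.
esxi_capacity() {
    echo $(( $1 * 2 - 63 * 1024 ))
}

# Original ovf capacities of disk1..disk6, in bytes.
for c in 10092418560 5782371840 41957153280 15743185920 24675840 27793221120; do
    esxi_capacity "$c"
done
```

The first two results, 20184772608 and 11564679168, match the values computed by hand above from the vmware-mount output.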

5. Let’s have a new try with this hypothesis.

5.1 New ovf

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# diff HCMDB-SES-854-01.ovf HCMDB-SES-854-01.ovf.orig

4,9c4,9

< <File ovf:href="HCMDB-SES-854-01-disk1.vmdk" ovf:id="file1" ovf:size="2258674176"/>

< <File ovf:href="HCMDB-SES-854-01-disk2.vmdk" ovf:id="file2" ovf:size="3552615424"/>

< <File ovf:href="HCMDB-SES-854-01-disk3.vmdk" ovf:id="file3" ovf:size="8894975488"/>

< <File ovf:href="HCMDB-SES-854-01-disk4.vmdk" ovf:id="file4" ovf:size="7227696640"/>

< <File ovf:href="HCMDB-SES-854-01-disk5.vmdk" ovf:id="file5" ovf:size="144896"/>

< <File ovf:href="HCMDB-SES-854-01-disk6.vmdk" ovf:id="file6" ovf:size="15863050240"/>

---

> <File ovf:href="HCMDB-SES-854-01-disk1.vmdk" ovf:id="file1"/>

> <File ovf:href="HCMDB-SES-854-01-disk2.vmdk" ovf:id="file2"/>

> <File ovf:href="HCMDB-SES-854-01-disk3.vmdk" ovf:id="file3"/>

> <File ovf:href="HCMDB-SES-854-01-disk4.vmdk" ovf:id="file4"/>

> <File ovf:href="HCMDB-SES-854-01-disk5.vmdk" ovf:id="file5"/>

> <File ovf:href="HCMDB-SES-854-01-disk6.vmdk" ovf:id="file6"/>

13,18c13,18

< <Disk ovf:capacity="20184772608" ovf:diskId="vmdisk1" ovf:fileRef="file1" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="34649586-c43a-4df3-af9c-2445fd543fdf"/>

< <Disk ovf:capacity="11564679168" ovf:diskId="vmdisk2" ovf:fileRef="file2" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="f108476c-e701-49f8-b8f0-f6a2b4d6c4d5"/>

< <Disk ovf:capacity="83914242048" ovf:diskId="vmdisk3" ovf:fileRef="file3" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="6ca0b490-9258-4643-888c-1b0722199fe5"/>

< <Disk ovf:capacity="31486307328" ovf:diskId="vmdisk4" ovf:fileRef="file4" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="1a394121-3721-4d20-8c09-cd4f9b7f2053"/>

< <Disk ovf:capacity="49287168" ovf:diskId="vmdisk5" ovf:fileRef="file5" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="3bbbc232-080d-4536-916c-262fe5b2d379"/>

< <Disk ovf:capacity="55586377728" ovf:diskId="vmdisk6" ovf:fileRef="file6" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="696b9eac-bbe8-4e49-84c2-c0c5d8b84480"/>

---

> <Disk ovf:capacity="10092418560" ovf:diskId="vmdisk1" ovf:fileRef="file1" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="34649586-c43a-4df3-af9c-2445fd543fdf"/>

> <Disk ovf:capacity="5782371840" ovf:diskId="vmdisk2" ovf:fileRef="file2" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="f108476c-e701-49f8-b8f0-f6a2b4d6c4d5"/>

> <Disk ovf:capacity="41957153280" ovf:diskId="vmdisk3" ovf:fileRef="file3" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="6ca0b490-9258-4643-888c-1b0722199fe5"/>

> <Disk ovf:capacity="15743185920" ovf:diskId="vmdisk4" ovf:fileRef="file4" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="1a394121-3721-4d20-8c09-cd4f9b7f2053"/>

> <Disk ovf:capacity="24675840" ovf:diskId="vmdisk5" ovf:fileRef="file5" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="3bbbc232-080d-4536-916c-262fe5b2d379"/>

> <Disk ovf:capacity="27793221120" ovf:diskId="vmdisk6" ovf:fileRef="file6" ovf:format="http://www.vmware.com/interfaces/specifications/vmdk.html#streamOptimized" vbox:uuid="696b9eac-bbe8-4e49-84c2-c0c5d8b84480"/>

34c34

< <OperatingSystemSection ovf:id="101">

---

> <OperatingSystemSection ovf:id="109">

36,37c36,37

< <Description>oracleLinux64Guest</Description>

< <vbox:OSType ovf:required="false">oracleLinux64Guest</vbox:OSType>

---

> <Description>Oracle_64</Description>

> <vbox:OSType ovf:required="false">Oracle_64</vbox:OSType>

45c45

< <vssd:VirtualSystemType>vmx-09</vssd:VirtualSystemType>

---

> <vssd:VirtualSystemType>virtualbox-2.2</vssd:VirtualSystemType>

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]#

5.2 ovftool control file

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# cat .ovftool

lax

datastore=vm

skipManifestCheck

overwrite

powerOffTarget

net:HostOnly=VM Network 2

diskMode=thin

annotation=HCM9.2 - Peopletools 8.54.01

name=HCM92008

5.3 Push to ESXi

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]# ovftool HCMDB-SES-854-01.ovf vi://root:pwd@192.168.1.10:443

Opening OVF source: HCMDB-SES-854-01.ovf

Opening VI target: vi://root@192.168.1.10:443/

Deploying to VI: vi://root@192.168.1.10:443/

Transfer Completed

Completed successfully

[root@omsa:/nfs/software/PeopleSoftCD/OVA/HCM-920-UPD-008_OVA]#

5.4 Booting VM

The new VM starts as expected. Back to normal.

OK, I hope it is clear. In short: in the ovf file, change the capacity of each disk to (capacity*2)-64512.

Successfully tested on HCM92008 and FSCM92008 images.

Nicolas.

1 comment:

I just got finished importing the HCM-920-UPD-015.ova version of this into an ESX 5.5 environment, and can add a bit more information to your notes.

First off, a few of your assumptions were incorrect, which results in the final conclusion also being a bit off. Notably, in many of the places where you thought sizes were being measured/displayed in KiB (e.g. point 3 about capacity from the VMDK file), the size is actually measured in blocks, not KiB. A block is 512 bytes, which explains why you kept seeing sizes that were double what the original OVF said the file should be.

However after a bunch of experimentation I finally found out what the correct sizes should be for the VMDK files - using the 'qemu-img' tool to dump the metadata from the VMDK files gives the correct (minimum) sizes that can be set for the disks.

It turns out that the VMDKs are using a 64KiB chunk size, but for some reason I can't explain (possibly related to the fact that they are compressed) their size is not a multiple of 64KiB. Rounding the capacity numbers up to the next multiple of 65536 solves the problem. In my case the original capacity from the OVF file for disk 1 was 10355627520 bytes, but that resulted in a VM that would only try/fail to boot from network. Increasing the capacity of that disk to 10355671040 bytes (a 43520 byte increase) gives me a VM that boots properly.

And so the simplest way to get the correct capacity numbers would be to take the original capacity, divide by 65536 (1024 * 64 aka 64KiB) to get the number of chunks, round that number up to the next whole number (for my example disk above the math gave me 158014.34.... chunks, so round to 158015), and then multiply by 65536 to convert back to bytes again.
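The rounding described in this comment can be sketched in shell integer arithmetic; `round_up_64k` is a hypothetical helper name, and the only assumption is the 64KiB chunk size stated above.

```shell
# Sketch: round a capacity in bytes up to the next multiple of 64KiB (65536).
# round_up_64k is a hypothetical helper name.
round_up_64k() {
    chunk=65536
    # Integer division truncates, so adding chunk-1 first rounds up.
    echo $(( ($1 + chunk - 1) / chunk * chunk ))
}
```

For the example disk, `round_up_64k 10355627520` yields 10355671040, i.e. 158015 chunks, and a value that is already a multiple of 65536 comes back unchanged.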
